The DALL-E 2 system conjures images of the impossible so vividly that you could be excused for wondering if Johannes Vermeer really did paint another version of Girl with a Pearl Earring. You also could be excused for a dumbfounded stare at Gappes, a gourmet box of bizarre little grapes that is DALL-E 2’s photo interpretation of “a box of Grape-Nuts on a grocery store shelf."

DALL-E is one of the most advanced image-generating AI systems to date. OpenAI's text-to-image model first dazzled the tech world in January 2021 by producing professional-looking art and hyper-realistic photos from natural language prompts. The higher-resolution DALL-E 2, released in April 2022, generates images so true to reality you can see the nooks and crannies of waking life: tiny fissures in plaster, distinct blades of grass, the nubby texture of a cheetah’s fur.

Many of those who’ve experimented with the DALL-E 2 model or seen it demonstrated say the technology is poised to upend digital art as we’ve known it.

"It's incredibly powerful," Hany Farid, a digital forensics expert at the University of California, Berkeley, told NPR. "It takes the deepest, darkest recesses of your imagination and renders it into something that is eerily pertinent."

Fast Company's Jesus Diaz concluded that almost any image was possible: “You can imagine a dramatic love story between John Oliver and a cabbage or turn David Bowie’s lyrics into surrealist artwork worthy of an album by Ziggy Stardust himself.”

But several early access users, including research scientist and blogger Jannelle Shane, explain that DALL-E 2 is not a one-and-done image generator. In fact, Shane has reported, the system often requires some odd tweaks before it can deliver desired results. She’s also come to a few dead ends. In other words, this incredibly impressive development in artificial intelligence is still, at its core, a new machine with kinks to be worked out.

DALL-E – its name a play on the techie-beloved Pixar film WALL-E and Surrealist painter Salvador Dalí – is available by invitation only. The original model was made available to a small group of researchers, academics, journalists, and artists. OpenAI announced earlier this year that 1 million users will gradually be given access to DALL-E 2. Twitter and Reddit have been ablaze with speculation on a larger public release, though OpenAI has announced no such plans.

The DALL-E and DALL-E 2 models are the result of a massive lift-off involving a ginormous data set, multiple levels of technology and the creation of extra-high security walls. OpenAI began by trawling wide cross-sections of the internet, eventually amassing a database of 650 million image-text pairs. With this information trove at its virtual fingertips, the DALL-E model learned the relationships between images and the words used to describe them. Determined to drastically limit opportunities to generate deepfakes and malicious imagery, OpenAI says it filtered out as much violent, sexual, and hateful content as possible from the data set, conceding that there could still be breakthroughs.

“The model isn’t exposed to these concepts,” OpenAI researcher Mark Chen told IEEE Spectrum, the lead publication of the Institute of Electrical and Electronics Engineers, “so the likelihood of it generating things it hasn’t seen is very, very low.”

Once the encoder model was trained, OpenAI needed a decoder. The company reverse engineered its CLIP system, which originally generated text from image prompts. Using a process called diffusion, DALL-E 2 images begin as a random pattern of dots, which are slowly moved around to create a picture. The combined digital brains of the components generate images within 20 seconds of text input.

Alberto Romero, an analyst at Cambrian AI Researcher and regular contributor to tech news site Toward Data Science is impressed:

“Before DALL-E 2 we used to say ‘imagination is the limit.’ Now, I’m confident DALL-E 2 could create imagery that goes beyond what we can imagine. No person in the world has a mental repertoire of visual representations equal to DALL-E 2’s. It may be less coherent in the extremes and may not have an equally good understanding of the physics of the world, but its raw capabilities humble ours.”

DALL-E 2's grasp of physics is the wheelhouse of the aforementioned scientist Janelle Shane. She has posted about DALL-E 2 repeatedly since she was granted early access to the updated model in April 2022. She’s found that generating a desired image requires quite a bit of trial and error. She’s also found some of DALL-E 2’s limits.

Shane, a research scientist at Boulder Nonlinear Systems and creator of the popular blog AI Weirdness, has documented these attempts in a series of fun but challenging experiments. First off, she’s had to put her text prompts through odd permutations to get usable images. In one instance, she had to add the word "horsie" to the end of captions used to generate images of bay horses that featured the characteristics of other animals.

Then there was "The Kitten Effect". In this experiment, Shane tested DALL-E 2’s ability to depict groups of figures, noting that “one thing I've noticed with image-generating algorithms is that the more of something they have to put in an image, the worse it is.”

DALL-E 2 had no problem delivering on Shane’s prompt for “a kitten in a basket.” The virtual furry babe peers out from a wicker nook with deep azure eyes and feathery strands of fur. But AI weirdness shows up when Shane types in the prompt: “two cute kittens in a basket.” The generated pair are cute and fluffy but their digi-eyes are wonky. One has visible sclera (not a thing with cats) and seems to be wearing eyeliner. The other has half-closed lids and irises the colour of a Game of Thrones Whitewalker.

By the time Shane gets to “ten cute kittens in a basket”, each cat has a different look: sleepy, drugged, evil. Those are the normal-looking ones. One kitten appears to be a full-on stuffed animal toy. Another has a grotesque, twisted koala-like head that is missing an eye and an ear. Other various kitten parts litter the scene.

“Note,” Shane wrote, “that the kittens are all happy and doing great because when you are a virtual kitten it doesn't matter if you have extra ear holes in the middle of your face.”

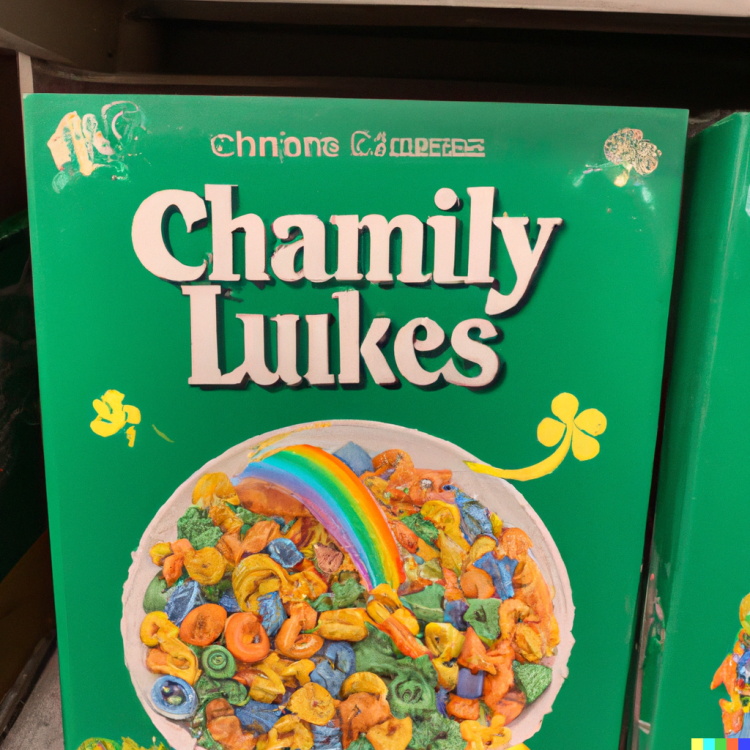

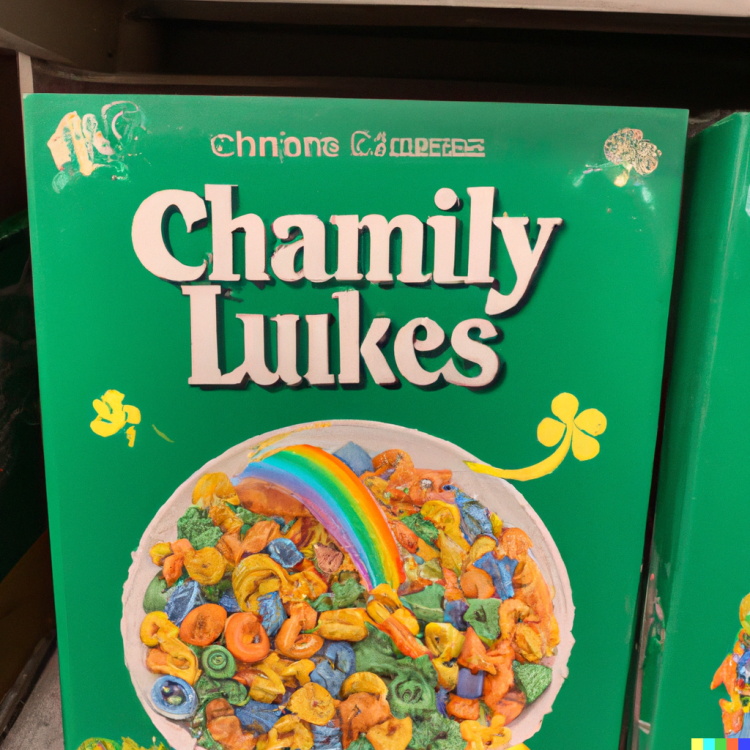

The current headlining post is "AI recreates classic cereals". This is where Gappes, the literal grape nuts, came to be. Shane goes to town, generating images from text prompts containing the names of cereals most Americans, at least, will recognize instantly: Lucky Charms, Fruity Pebbles, Frosted Flakes, and more. OpenAI concedes that DALL-E 2 often fails when it comes to generating images of real words, but Shane proved just how off-target the system could be. Prompted by "a box of lucky charms cereal on a grocery store shelf", DALL-E 2 spits out an image Chamily Lukes. The pine-green box of “cereal” features a “photo” of a bowl filled with rainbow roughage.

And then there is the question of art itself. Can one consider oneself an artist for placing text into a system that generates images? Wired’s Jessica Rizzo recently dug into the vast unknown of DALL-E 2:

“Setting aside the question of copyrightability, OpenAI is signalling to users that they are free to commercialise their DALL-E images without fear of receiving a cease-and-desist letter from a company that, if it wanted to, could hire a team of lawyers to annihilate them over ‘a portrait photo of a parrot sipping a fruity drink through a straw in Margaritaville.’

“If DALL-E and technologies like it are widely adopted, the ramifications for artistic production itself could be far-reaching. Artists who come to rely on DALL-E will be left with nothing if OpenAI decides to reassert its rights. While relatively few artists incorporate AI into their practice today, it’s easy to imagine future generations associating creativity with giving a simple command to a machine and being delighted by the surprising results.”

Photos courtesy of AI Weirdness

Tamara Kerrill Field is Kaiju's Managing Editor. Her writing and commentary on the intersection of race, politics and socioeconomics have been featured in USNews & World Report, the Chicago Tribune, NPR, PBS NewsHour, and other outlets. She lives in Portland, ME.